GaLore

Gradient Low-Rank Projection (GaLore) is a memory-efficient training technique designed to work with standard optimizers such as Adam. It reduces the memory footprint of training by projecting gradients into a low-dimensional subspace, so that the optimizer's statistics are stored at that reduced size instead of at full parameter size. GaLore makes it possible to train Llama 8B from scratch on a single RTX 4090 GPU (24GB), significantly lowering the hardware barrier for LLM training.

GaLore has received broad media coverage and adoption across the open-source community:

- Featured on HuggingFace Blog.

- Covered by 机器之心 (Synced).

- Explained visually by AICoffeeBreak.

- Integrated into frameworks like LLaMA-Factory and Axolotl, and adopted by TensorOpera.

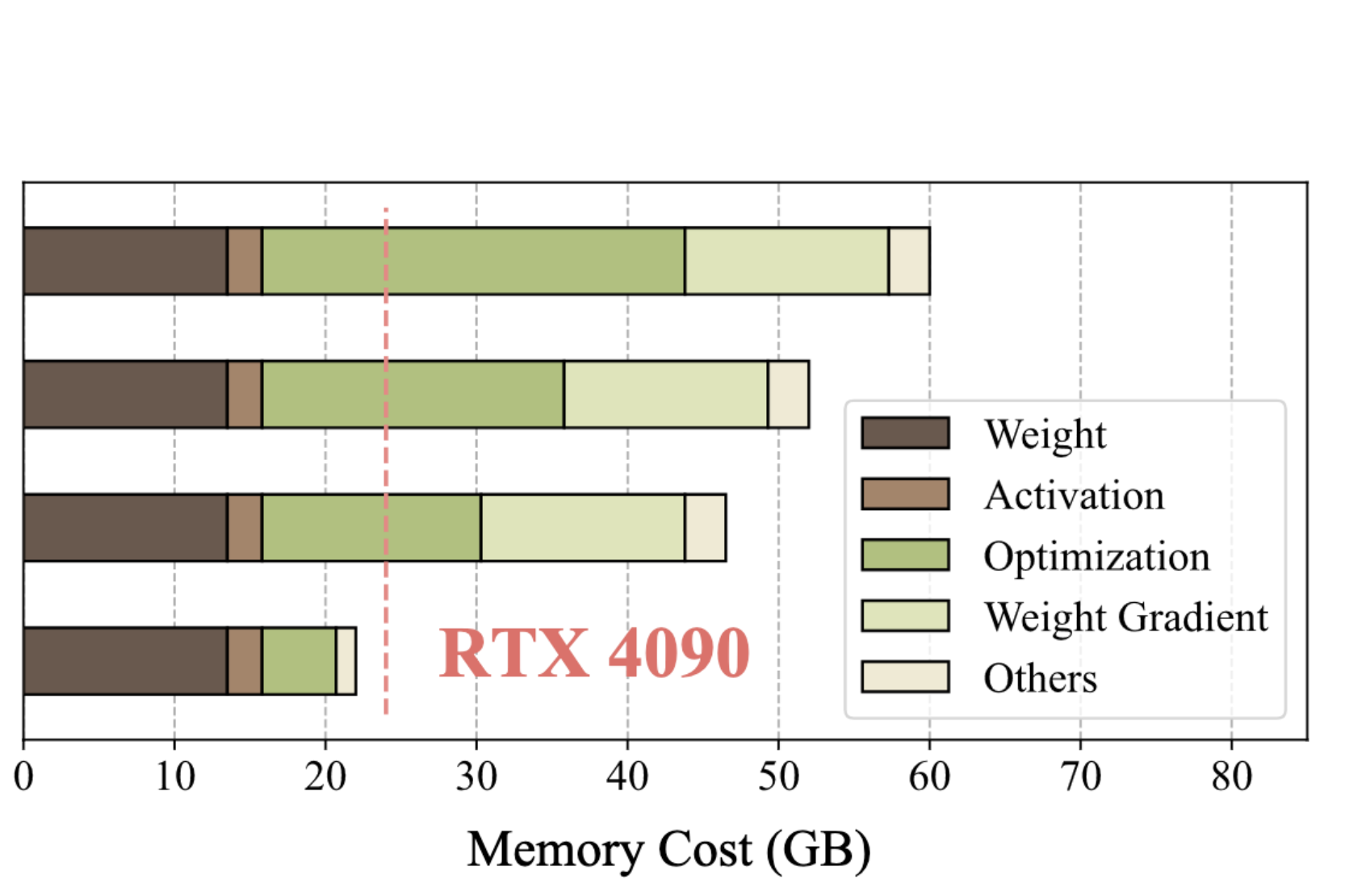

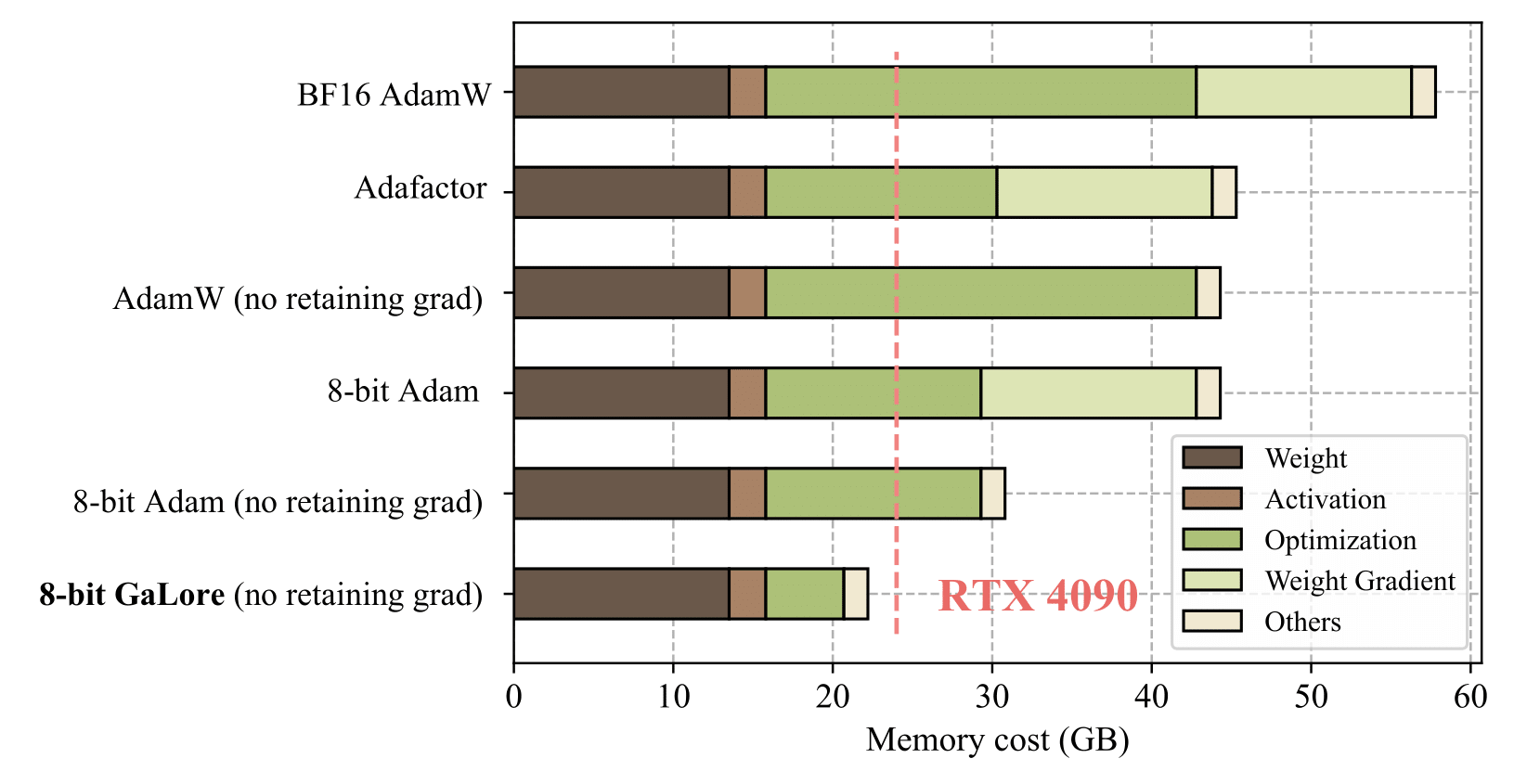

Memory Savings with GaLore

Training large neural networks with adaptive optimizers such as Adam requires storing first- and second-order moment estimates for every parameter, which often takes two to four times as much memory as the model weights themselves. GaLore tackles this by keeping those optimizer states in a compact low-rank form, freeing up significant GPU memory while maintaining training performance.
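To make the ratio concrete, the arithmetic below counts optimizer-state entries for a single weight matrix of shape m x n. It assumes Adam (two moments, each m x n) and a GaLore-style setup that projects along the smaller dimension, storing an m x r projection plus two r x n moments. The shape 4096 x 11008, the rank r = 1024, and fp32 states are illustrative assumptions, not settings stated on this page; the ratio depends strongly on r.

```python
def adam_state_elems(m: int, n: int) -> int:
    """Adam stores two moments (first and second), each the size of the m x n weight."""
    return 2 * m * n


def galore_state_elems(m: int, n: int, r: int) -> int:
    """GaLore-style states when projecting the m side (assumes m <= n):
    an m x r projection matrix plus two r x n moments."""
    assert m <= n, "this sketch only covers projection along the smaller (m) side"
    return m * r + 2 * r * n


m, n, r = 4096, 11008, 1024       # illustrative shape and rank
bytes_per_elem = 4                # assume fp32 optimizer states
full = adam_state_elems(m, n)
low = galore_state_elems(m, n, r)
print(f"Adam states:   {full * bytes_per_elem / 2**20:.1f} MiB")   # 344.0 MiB
print(f"GaLore states: {low * bytes_per_elem / 2**20:.1f} MiB")    # 102.0 MiB
print(f"reduction:     {full / low:.2f}x")                         # 3.37x
```

Lowering r shrinks the GaLore states further, at the cost of a smaller subspace per step.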

Key results:

- Reduces optimizer memory usage by 2–4×, enabling training of Llama 8B on a single 24GB GPU.

- Matches baseline optimizers like Adam and Adagrad in training performance.

- Lowers the cost of large-scale experimentation, making LLM training more accessible.

How GaLore Works

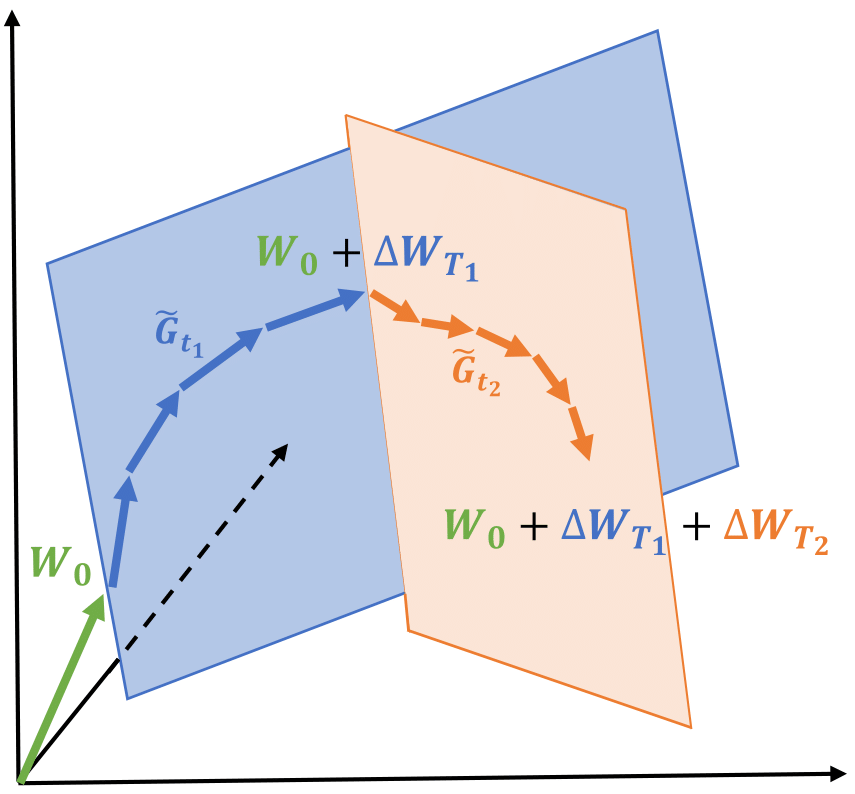

GaLore is built on a simple idea: although large models have billions of parameters, the gradient of each weight matrix tends to have low-rank structure, with most of its information concentrated in a few directions. Instead of storing and updating full-size optimizer states, GaLore projects gradients into a much smaller subspace, performs optimization there, and then maps the updates back to the full parameter space.
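One simple way to see how a gradient can live in a small subspace: for a linear layer y = Wx, each sample contributes a rank-1 gradient (the outer product of the upstream gradient and the input), so a mini-batch gradient has rank at most the batch size. The NumPy sketch below builds such a gradient, projects it onto its top-r left singular vectors, and maps it back. It is only an illustration of low-rank structure, not GaLore's own analysis, and the sizes are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, batch = 256, 512, 8

# Linear layer y = W x: per sample, dL/dW is the outer product delta x^T,
# so the batch gradient is a sum of `batch` rank-1 terms.
X = rng.standard_normal((batch, n))        # layer inputs
Delta = rng.standard_normal((batch, m))    # upstream gradients dL/dy
G = Delta.T @ X                            # (m, n) weight gradient
print("gradient shape:", G.shape, "rank:", np.linalg.matrix_rank(G))

# Project onto the top-r left singular vectors, then map back.
r = batch
U, S, Vt = np.linalg.svd(G, full_matrices=False)
P = U[:, :r]                               # (m, r) orthonormal basis
G_low = P.T @ G                            # (r, n): what a low-rank optimizer would see
G_back = P @ G_low                         # (m, n): mapped back to full size
rel_err = np.linalg.norm(G - G_back) / np.linalg.norm(G)
print(f"relative reconstruction error at r={r}: {rel_err:.2e}")
```

Here the compact gradient has 8 x 512 entries instead of 256 x 512, yet nothing is lost because the gradient's rank is 8. Real gradients are only approximately low-rank, so some information outside the subspace is dropped at each step.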

Here’s how it works in practice:

- Project gradients: Incoming gradients are compressed into a low-rank subspace, capturing their essential directions with far fewer values.

- Optimization in low-rank space: Optimizers like Adam or Adafactor keep their running statistics in this compact space, drastically reducing memory requirements.

- Project updates back: The low-rank updates are expanded back to full size and applied directly to the full weight matrices, as in standard training.
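The three steps can be sketched for a single weight matrix as below. The helper names (`make_projector`, `project`, `project_back`) are hypothetical, the projection basis is taken from an SVD of the gradient, and projecting along the smaller of the two dimensions (so the compact matrix is as small as possible) is an assumption of this sketch.

```python
import numpy as np


def make_projector(grad: np.ndarray, rank: int):
    """Hypothetical helper: orthonormal basis from the gradient's SVD,
    taken on the smaller side of the matrix. Returns (P, side)."""
    U, _, Vt = np.linalg.svd(grad, full_matrices=False)
    if grad.shape[0] <= grad.shape[1]:
        return U[:, :rank], "left"      # P: (m, r)
    return Vt[:rank, :].T, "right"      # P: (n, r)


def project(grad: np.ndarray, P: np.ndarray, side: str) -> np.ndarray:
    """Full (m, n) gradient -> compact (r, n) for "left" or (m, r) for "right"."""
    return P.T @ grad if side == "left" else grad @ P


def project_back(low: np.ndarray, P: np.ndarray, side: str) -> np.ndarray:
    """Compact update -> full (m, n) update."""
    return P @ low if side == "left" else low @ P.T


rng = np.random.default_rng(0)
for shape in [(64, 256), (256, 64)]:
    g = rng.standard_normal(shape)
    P, side = make_projector(g, rank=16)
    low = project(g, P, side)
    full = project_back(low, P, side)
    print(shape, side, low.shape, full.shape)
# (64, 256) left (16, 256) (64, 256)
# (256, 64) right (256, 16) (256, 64)
```

Whatever optimizer runs in the middle step would keep its statistics at the compact shape: r x n in the "left" case, m x r in the "right" case.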

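To avoid missing important gradient directions over time, GaLore periodically refreshes the subspace, recomputing the projection from a singular value decomposition (SVD) of the current gradient. Switching between subspaces in this way lets the weights be updated in many different directions over the course of training while the optimizer states stay small.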
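A minimal sketch of the periodic refresh, assuming the subspace is recomputed from the SVD of the current gradient every `update_gap` steps. The class `PeriodicProjector` and its parameters are hypothetical, only the left-projection case is shown, and random matrices stand in for real gradients.

```python
import numpy as np


class PeriodicProjector:
    """Hypothetical helper: left projection recomputed every `update_gap` steps."""

    def __init__(self, rank: int, update_gap: int):
        self.rank = rank
        self.update_gap = update_gap
        self.P = None
        self.refresh_steps = []          # steps at which the subspace was recomputed

    def project(self, grad: np.ndarray, step: int) -> np.ndarray:
        if self.P is None or step % self.update_gap == 0:
            U, _, _ = np.linalg.svd(grad, full_matrices=False)
            self.P = U[:, : self.rank]   # top-r left singular vectors of the current gradient
            self.refresh_steps.append(step)
        return self.P.T @ grad           # (r, n)

    def project_back(self, low: np.ndarray) -> np.ndarray:
        return self.P @ low              # (m, n)


rng = np.random.default_rng(0)
proj = PeriodicProjector(rank=4, update_gap=10)
for step in range(35):
    g = rng.standard_normal((32, 64))    # stand-in for a real gradient
    low = proj.project(g, step)
print(proj.refresh_steps)                # [0, 10, 20, 30]
```

Between refreshes the SVD cost is skipped entirely; the gap trades how quickly the subspace tracks the gradient against that extra compute.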

Pseudocode

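```python
import torch

@torch.no_grad()
def galore_adam_step(weight, grad, P, state, lr=1e-3, betas=(0.9, 0.999), eps=1e-8):
    """One GaLore-style Adam step (sketch) for a 2-D weight of shape (m, n).
    P is an (m, r) orthonormal projection; state holds "step" (int) and
    "m", "v" (zero-initialized moments of shape (r, n))."""
    b1, b2 = betas
    # 1) project to low-rank subspace: (m, n) -> (r, n)
    lor_grad = P.T @ grad
    # 2) optimizer update (here Adam) in low-rank space
    state["step"] += 1
    state["m"].mul_(b1).add_(lor_grad, alpha=1 - b1)
    state["v"].mul_(b2).addcmul_(lor_grad, lor_grad, value=1 - b2)
    m_hat = state["m"] / (1 - b1 ** state["step"])
    v_hat = state["v"] / (1 - b2 ** state["step"])
    lor_update = m_hat / (v_hat.sqrt() + eps)
    # 3) project back to full space: (r, n) -> (m, n)
    update = P @ lor_update
    # descend: subtract the scaled update (adding it would ascend the loss)
    weight.add_(update, alpha=-lr)
```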

By compressing only the optimizer states, GaLore keeps training behavior close to standard optimizers while significantly reducing GPU memory usage. This approach allows researchers to train multi-billion-parameter models on a single high-end consumer GPU, unlocking large-scale experimentation.
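Putting the pieces together, the toy example below trains a single 32 x 64 weight matrix on a least-squares problem with a GaLore-style Adam: the moments are kept at size r x n, the projection is refreshed from the gradient's SVD every `gap` steps, and updates are projected back before being applied. The problem, learning rate, rank, and refresh gap are arbitrary assumptions chosen so the sketch runs in well under a second; it is not the reference implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, N = 32, 64, 256            # weight is m x n (m <= n, so project the m side)
rank, gap, lr = 8, 20, 0.05      # arbitrary toy hyperparameters
beta1, beta2, eps = 0.9, 0.999, 1e-8

W_true = rng.standard_normal((m, n)) / np.sqrt(n)
X = rng.standard_normal((n, N))
Y = W_true @ X
W = np.zeros((m, n))


def loss_and_grad(W):
    """Loss 0.5 * ||W X - Y||^2 / N and its gradient with respect to W."""
    resid = W @ X - Y
    return 0.5 * np.sum(resid**2) / N, resid @ X.T / N


M = np.zeros((rank, n))          # first moment, stored at r x n instead of m x n
V = np.zeros((rank, n))          # second moment, stored at r x n
P = None
losses = []
for t in range(1, 301):
    loss, G = loss_and_grad(W)
    losses.append(loss)
    if P is None or (t - 1) % gap == 0:
        # refresh the subspace from the current gradient; moments carry over
        P = np.linalg.svd(G, full_matrices=False)[0][:, :rank]
    g_low = P.T @ G                              # 1) project: (r, n)
    M = beta1 * M + (1 - beta1) * g_low          # 2) Adam moments in low-rank space
    V = beta2 * V + (1 - beta2) * g_low**2
    m_hat = M / (1 - beta1**t)
    v_hat = V / (1 - beta2**t)
    W -= lr * (P @ (m_hat / (np.sqrt(v_hat) + eps)))   # 3) project back and descend

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Varying `rank` and `gap` on this toy problem gives a feel for how subspace size and refresh frequency affect convergence.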

Extensions

- Q-GaLore: Combines low-rank projection with quantization for even greater memory savings.

- Tensor-GaLore: Applies low-rank projection to tensor-structured optimizer states for better scaling.

- GaLore 2: Scales to hundreds of billions of tokens with improved momentum calibration, distributed SVD, and mixed-precision efficiency.
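Q-GaLore's exact quantization scheme is not described here, so the sketch below only illustrates the general idea of storing a projection matrix at lower precision. It applies a generic per-tensor symmetric int8 quantizer (an assumption, not Q-GaLore's method) to an SVD-derived projection and reports the storage saving and the error this introduces into the projected gradient. Sizes are arbitrary.

```python
import numpy as np


def quantize_int8(x: np.ndarray):
    """Generic per-tensor symmetric int8 quantization: x is approximated by scale * q."""
    scale = max(float(np.abs(x).max()), 1e-12) / 127.0
    q = np.clip(np.round(x / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q: np.ndarray, scale: float) -> np.ndarray:
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
G = rng.standard_normal((256, 1024)).astype(np.float32)   # stand-in gradient
P = np.linalg.svd(G, full_matrices=False)[0][:, :32]      # (256, 32) fp32 projection
q, scale = quantize_int8(P)
P_hat = dequantize(q, scale)

exact = P.T @ G
approx = P_hat.T @ G
rel = np.linalg.norm(exact - approx) / np.linalg.norm(exact)
print(f"projection storage: {P.nbytes} bytes fp32 -> {q.nbytes} bytes int8")
print(f"relative error in projected gradient: {rel:.4f}")
```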